Joint compilation: Gao Fei, Blake

Editor's note: It is generally believed that the 1956 Dartmouth Conference laid the foundation for artificial intelligence, and this year coincided with the 60th anniversary of the birth of artificial intelligence. What is rarely known is that the term “artificial intelligence†was first proposed a year ago on August 31, 1955. Today is exactly the 61st anniversary of this concept being proposed. From the birth of this concept to the birth, what is the story of "October Pregnancy"?

On August 31, 1955, the term "artificial intelligence" first appeared on a seminar proposal that lasted two months and only 10 people participated. Proponents include John McCarthy (Dartmouth College), Marvin Minsky (Harvard University), Nathaniel Rochester (IBM), and Claude Shannon (Bell Telephone Laboratories) among others. The Dartmouth Summer Symposium (1956) later this year is widely considered to be the day of artificial intelligence research.

In this proposal, the definition of "artificial intelligence" is given:

Try to find ways to make machines use languages, form abstractions and concepts, solve problems that humans cannot solve today, promote themselves, and so on. The first issue for the current artificial intelligence is to make the machine behave like a human being.

And the results you hope for:

"We believe that if a group of outstanding scientists work together for a summer vacation, they will be able to make a significant progress in these issues (one or more)."

This conference proposal put forward the direction of research and reflection in the direction of artificial intelligence. After nearly a year of brewing, the discussion at the Dartmouth conference in 1956 has aroused the attention and resonance of the computer science community, thus announcing the "artificial The birth of this emerging discipline of intelligence. For the proposal of this conference that has guiding significance for the development of artificial intelligence, Lei Fengnet compiled it as follows:

J. McCarthy, Dartmouth College

ML Minsky, Harvard University

N. Rochester, IBM Corporation

CE. Shannon, Bell Telephone Laboratory

1955.8.31.

We propose to hold a two-month, 10-person AI workshop at the Dartmouth College in Hanover, New Hampshire, during the summer vacation next year (1956). Our research is based on the conjecture that every aspect of (human) learning or any feature of intelligence can be accurately described in principle and can be used by machines to simulate learning and intelligence. Our research is based on this conjecture. We will try to find ways to make machines use languages, form abstractions and concepts, solve problems that humans cannot solve today, promote ourselves, and so on. We believe that if a group of outstanding scientists work together for a summer vacation, they can make a significant progress in these issues (one or more).

Here are some of the questions we think about artificial intelligence:

1. Automated computer

If a machine can work, an automated computer can be programmed to simulate machine work. The running speed and memory capacity of existing computers may not be able to support many advanced functions that simulate the human brain. However, the main bottleneck is not the small size of the machine, but because we cannot write enough computers to simulate the human brain. A powerful program with advanced features.

2. How to program so that the computer has the ability to use the language?

According to speculation, most of human thoughts are obtained by using vocabulary through reasoning and imagination. Based on this point of view, it can be concluded that human language inductive induction ability enters mental vocabulary by allowing some linguistic rules implied by a new vocabulary and some sentences containing the vocabulary, or some linguistic rules implied by other sentences. Found in the library. However, no one has accurately explained this point of view, nor has it listed examples of related language applications.

3. Neural network

How to design a group of (presumed) neurons so that these neurons can generate concepts? On this subject Uttley, Rashevsky and his team, Farley and Clark, Pitts and McCulloch, Minsky, Rochester and Holland and other researchers have made a lot of theoretical research and experimental research. Some research results have been obtained, but in order to solve this problem still need a lot of theoretical research support.

4. Computational scale theory

Assuming that we want to solve a very good problem (need to rigorously test the validity of a propositional answer), one solution is to test all the answers at once. This kind of scheme is inefficient, however, in order to exclude this solution, we need to make some standards about the efficient calculation method. We have considered that in order to measure the efficiency of a computing method, it is necessary to develop a set of methods to measure the complexity of computing equipment. To formulate such a method, we need to put forward a theory about the complexity of the function. Shannon and McCarthy conducted research on this issue and obtained some results.

5. Self-improvement

A truly intelligent machine may perform some activities. These activities can, to a large extent, become self-improvements. In order to achieve this goal, some proposals have been made and deserve further study. This problem can also be studied at an abstract level.

6. Abstract concept

A large number of "abstract concept" types can be clearly defined, and there are also some "abstract concept" types that are difficult to unambiguously define. It is worth trying to divide these "abstract concept" types directly and describe machine methods that abstract the concept from sensory data or other data sources.

7. Randomness and creativity

For the difference between creative thinking and the lack of imagination and subjective decision-making power, we have a very tempting, but not perfect idea, that the difference between the two lies in the randomness. This randomness comes with intuition and is efficient. In other words, in general, restricted randomness is a product of orderly thinking, but guessing or premonition that comes into consideration includes such randomness instead.

In addition to the above-mentioned issues for research jointly proposed by us, we also invite individuals who participated in the project to present their respective research priorities. See the appendix for the speeches of the four sponsors of this project.

The sponsors of this conference proposal are as follows: CE Shannon, ML Minsky, N. Rochester, J. McCarthy. The Rockefeller Foundation provided related cost support for this project.

I hope my future research will focus on one or two research topics listed below.

1. Apply the concept of information theory to computing devices and brain models. One of the major problems in information theory is the reliable dissemination of information through a noise channel. There is a similar problem with computing machines that uses unreliable elements for reliable calculations. Regarding this issue, von Neumann, Shannon and Moore have done research, but there are still some open issues that need to be solved. With regard to several elements, the development of concepts similar to the capacity of the channels, the analysis of the upper and lower limits of required redundancy, and other issues are listed as important issues. Another problem is related to the theory of information networks in which information is circulated in many closed loops (this kind of information flow is in contrast to the simple, single-channel approach to information flow that communication theory generally advocates). In the closed circle, the problem of information flow delay becomes an important research object, and it is necessary to propose a completely new approach. When part of the past information of a set of information is known, this involves conceptual issues such as local entropy.

2. An environment-brain model that matches the robot. Usually a machine or animal can only operate in a limited environment or can only adapt to a limited environment. Even a complex human brain initially adapts to some simple features of its existing environment and gradually adapts to other complex environmental features. I propose to study the brain model synthetically by studying the parallel development of a series of matching (theoretical) environments and corresponding brain models. The focus of the study is to classify the environment model and use a mathematical structure to represent the model. Often when discussing mechanized intelligence, we think of machines that can perform advanced human thinking activities such as demonstrating certain principles, creating music, or playing chess. Here, I propose that starting from a simple environmental model, when the environment becomes favorable (it is only irrelevant) or not so complicated, it begins with a series of simple models and slowly moves towards the study of advanced activities.

It is not difficult to design a machine with the following learning capabilities. Equipped with input and output channels for the machine, and an internal method that provides various output responses for input information, the machine can be trained by trial and error and obtain a series of input and output functions. Such a machine, if placed in a suitable environment and given a set of "success" or "failure" metrics, can be trained to demonstrate a set of "target exploration" behavioral patterns. Such a machine intelligence can develop slowly in a complex environment, and usually it will not have a high-level behavior model unless it is provided to such a machine, or the machine itself can develop and abstract sensory data.

Today, the criteria for judging success should not be limited to producing specific patterns of activity as designers expect in the output channels of machines, but should include the performance of a particular operation in a particular environment. To a certain extent, the situation of the power machine presents a two-part sensory situation, and when the machine has the ability to integrate the set of “dynamic machine abstractions†associated with its output activities and environmental changes, it can quickly achieve success.

Over a period of time, I have made relevant research on such systems and believe that if the sensory abstraction of the designed machine satisfies certain relationships with the power machine abstraction, this machine will have the ability to demonstrate a higher level of behavioral patterns. If the corresponding power machine behavior actually occurs, these relationships involve pairing, power machine abstraction and sensory abstraction, which will form new sensory situations that represent the pre-defined environmental changes.

The important results sought are: According to the environment characteristics, the machine itself can establish an abstract environment model. If difficulties are encountered, the machine first searches for answers from the internal abstract environment model before attempting external experiments. Given these initial internal studies, external experiments will become more flexible, and the behavioral models exhibited by machines will be seen as highly "imaginative."

In my dissertation, I will try to explore how the machine simulates human behavior patterns and will work harder in this direction. I hope that by the summer of 1956, I was able to design such a smart machine that was very close to the computer programming stage.

Originality of machine performance

In the process of programming an automated computer, in general, we should set a series of guidelines for the machine to handle any unexpected events during the operation. We expect that the machine will follow the set criteria to a great extent and demonstrate non-original or common sense. In addition, when the machine operates in a disorderly situation, the designer also feels bored because the principle he sets for the machine itself is somewhat contradictory. Finally, in the process of programming a machine, designers often struggle to deal with the problems they encounter. However, when the machine has a little intuition or can reasonably reason, the machine itself can directly find the problem. The concept described in this article is as follows: How to enable the machine to demonstrate a more sophisticated high-end behavior pattern in the above-mentioned broad areas. The issues discussed in the article I have also been more or less involved in this five-year period, I hope that the research in this area will make progress in the next summer's artificial intelligence project.

The process of invention or discoveryLiving in our cultural environment allows us to solve many problems. It is still not clear how the process described above is performed, but I will discuss this aspect of the issue in accordance with the model proposed by Craik. Craik suggested that the psychological effects are mainly formed by building small engines in the brain that can simulate and predict the abstract concepts related to the environment. Therefore, the solution to this problem can be listed as follows:

1. The environment can provide data and form certain abstractions based on the data provided.

2. These abstract concepts are provided with some inner habits or motivations:

3.1 Provide a definition of the problem with respect to the environment in which the future expectation will be achieved, ie set a goal.

3.2 A suggested course of action to resolve this issue.

3.3 Stimulate the engine in the brain that matches this situation.

4. The engine will then predict what the environmental characteristics and proposed action plan will lead to.

5. If the predicted result matches the target, the individual will continue to act in accordance with the indicated plan.

At present, the most practical machine solution to this problem is the expansion of the Monte Carlo method. Problems that can usually be solved with the Monte Carlo method are always misunderstood situations, and there are many possibilities. In the process of obtaining the analysis plan, we cannot determine which factors to ignore. Therefore, mathematicians use machines to perform thousands of random experiments. The experimental results provide a rough guess about the answer. The extension of the Monte Carlo method is the use of these results as a guide to determine which factors to ignore to simplify the problem and obtain an approximate analytical solution.

Some people may ask why this method also contains randomness. Because, for the machine, it is necessary to use randomness to overcome the insufficient considerations of programmers and overcome their prejudices. Although the necessity for this method to contain randomness has not yet been confirmed, there is currently ample evidence to support its necessity.

Random machineIt is not feasible to program an automated computer to be original, but to introduce randomness without using a sight. For example, when a designer writes a program that causes the computer to generate random data for every 10,000 steps and operate it as an instruction, the result will be confusion. When there is a lot of confusion, the machine may try some prohibited instructions or execute stop instructions. In this case, the experiment will be aborted.

However, there are two reasonable ways to address the above issues. First, find out how the brain successfully handles chaotic operations and replicates brain functions. Second, use some examples of problems that require finding original answers, and try to program on an automated computer to solve these problems. Any of the two methods may be successful. However, it has not been possible to determine these two methods, which are faster and shorter. My work in this area of ​​research focuses mainly on the former method, because I think that in order to solve this problem, it is best to be able to master all relevant scientific knowledge. I have realized the current state of these computers and realized that the machine is programmed. The artistic charm.

The control mechanism of the brain is obviously different from the computer control mechanism of today. One of the differences is in the way of failure. The failure of a computer is mainly reflected in the output of unreasonable results. Storage errors or data transmission errors largely go beyond the data plane. A control error will result in any result, an incorrect instruction or an erroneous input-output unit may be executed. On the other hand, human language mistakes may produce still logical, plausible language output. It may be that the brain mechanism is such that a little error in reasoning will make the randomness generated develop toward a reasonable and correct direction. Perhaps the mechanism that controls the sequence of behaviors can guide such random factors, ultimately improving the effectiveness of the imagination process under completely random conditions.

Some research has been devoted to simulating neuronal networks on our automated computers. The focus of this study is to enable the machine to form and operate concepts, with abstraction, generalization, and naming capabilities. Research has been conducted on the brain mechanism. The first phase of the experiment was mainly to amend certain details of the theory. The second phase is underway. It is expected that by the summer of next year, this study will be successfully completed and the final experimental report will be completed. It is still too early to predict which stage of my experiment will be carried out next summer. However, the main research question I will continue to explore in this paper is: “How to design a machine to ensure that it will show itself in solving problems. Originality?"

Language and intelligenceIn the artificial intelligence seminar to be held next summer, I propose to study the relationship between language and intelligence. Obviously, applying trial-and-error methods directly to the relationship between sensory data and power machine activity will not help the machine learn more complex patterns of behavior. On the contrary, it is necessary to apply trial and error to a more abstract level. Obviously, the human brain uses language as a means to deal with complex phenomena. Higher levels of trial and error are usually presented in the form of ideas and verification concepts. The large number of linguistic features possessed by the English language is lacking in every official language described so far.

1. Can accurately describe the arguments in English that are supplemented by informal mathematics.

2. The breadth of English applications allows this language to absorb and make reasonable use of any other language.

3. English-language users can use English to refer to themselves and revise their own presentation based on their own progress in solving the problems encountered in the research process.

4. In addition to the rules of proof, English, if fully expressed in mathematics, can form speculative rules.

The current logical language has been formed into a list of instructions that allow computers to perform advanced calculations or to form part of formula expressions in mathematics. The latter has been constructed to facilitate:

1. A simple description using informal mathematics.

2. Allow translation of statement expressions from informal mathematical expressions into languages.

3. Discuss a certification process.

At present, no one has attempted to make the proof process in the artificial language as brief as the proof process in informal mathematics. Therefore, it is worth trying to construct a computer-programmed artificial language to solve the problem of guessing and self-reasoning. This artificial language should be consistent with English, that is, a brief English statement on a specific topic should correspond to a short statement in the artificial language. Therefore, the short point of view in English should correspond to a short guessing viewpoint in artificial language. I hope to be able to work out an artificial language with these characteristics and include concepts like physical objects, events, etc. I also hope to use this artificial language to program machines so that machines can play games like humans and perform other tasks possible. .

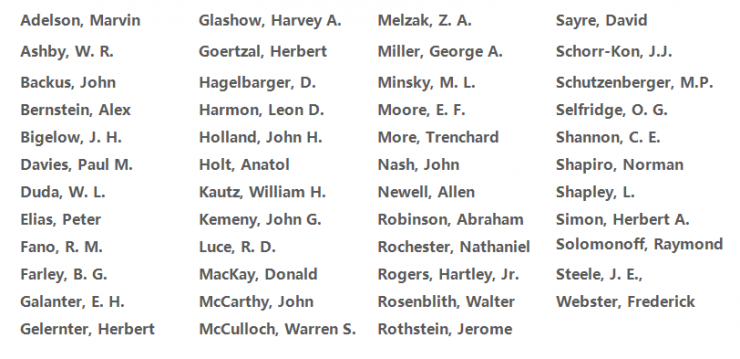

The following is a list of people who will attend the Dartmouth seminar next year and who are interested in this research project:

postscript:

On July 12-15, 2006, the Dartmouth Artificial Intelligence Conference was held. The theme of the conference was "The next 50 years (AI@50)" and it was to commemorate this event. The 50th anniversary of the "Dartmouth Conference". Five of the ten original scientists participated in the conference. They were: Marvin Minsky, Ray Solomonoff, Oliver Selfridge, Trenchard More, and John McCarthy. President of the conference James Moor mentioned in the summary report published by AI magazine:

"It is difficult to find out the starting date of any progress, but the Dartmouth summer research project of 1956 is often considered to be the beginning of AI as a research area. John McCarthy, was Dartmouth's A professor of mathematics, he was disappointed with his paper on collaboration with Claude Shannon (published on Automata Studies) because it did not mention more about the possibility of computers acquiring intelligence. Therefore, at John McCarthy, Marvin In a proposal written by Minsky, Claude Shannon, and Nathaniel Rochester for the 1956 Symposium, McCarthy wanted to further clarify the concept. He was also considered to be the creator of the term “artificial intelligence,†and he set the direction for research in this area. Under the assumption, if "computational intelligence" or any other possible word is used at the time, will research in the field of artificial intelligence be different now?"

Five scientists in the 1956 original project participated in AI@50, and they all recalled the past.

McCarthy believes that the 1956 project did not meet initial cooperation expectations. Participants did not arrive at the same time and basically remained on their own research agenda. However, McCarthy emphasized that there are still many significant research advances during the project, especially Allen Newell, Cliff Shaw, and Herbert Simon's Information Processing Language (IPL), as well as logical theory machines;

Marvin Minsky mentioned that although he studied neural networks as his dissertation a few years before the 1956 project, he interrupted the work because he was convinced that other ways of using computers could make progress in this area. Minsky also mentioned that too much AI research nowadays only wants to do the most popular things and only publishes successful results. He believes that AI can become science because previous scholars not only published those successful results, but also published those that failed;

Oliver Selfridge specifically mentioned that research in related fields (before or after the 1956 summer project) made important contributions to the promotion of AI as a research field. The improvement of language and machine development is its essential reason. He proposed to pay tribute to many early pioneer scientists, such as JCR Licklider who developed the time-sharing theory, Nat Rochester who designed the IBM computer, and Frank Rosenblatt who has been working on perceptron research;

Trenchard More was sent to the summer program by the University of Rochester for two weeks. Some of the best notes on the AI ​​project were recorded by him, although ironically he admitted that he never liked "artificial" or "intelligence" as the name of this research field;

Ray Solomonoff said that the reason why he went to this summer program is to persuade everyone about the importance of machine learning. During the project, he learned a lot about the Turing Machine and affected his future work.

As a result, the 1956 summer research project was not satisfactory in some respects. Participants in the research project have different time of arrival and each is dedicated to its own project. Therefore, this research project cannot be regarded as a meeting in the ordinary sense. This area of ​​research does not reach a consensus on the basic theoretical level, especially the lack of a unified definition of the basic theory of learning. The development of AI research is not based on the consistency of methods, problem choices, or common theories. Instead, researchers have a common vision—computers can perform intelligent tasks. In the 1956 General Assembly proposal, the vision was boldly described: "Our research is based on the conjecture that every aspect of (human) learning or any feature of intelligence is in principle Can be accurately described and can be used by machines to simulate learning and intelligence. Our research is based on this conjecture."

Although in the past 50 years, the AI ​​research field has achieved many successes, there are still countless obvious differences in this field. Often there are different areas of research that are unwilling to cooperate. Researchers use different research methods. They still lack basic theories about intelligence or learning unity, so that the research in this field can maintain consistency and achieve simultaneous development. (Editor's note: The situation has changed in the past decade, and the method represented by deep learning has become the mainstream of artificial intelligence. Despite this, the academic community is still looking forward to other theoretical breakthroughs in the area of ​​artificial intelligence. It is undeniable that The Dartmouth Conference in 1956 was one of the most important historical milestones in the field of artificial intelligence and still guides the direction of future research in artificial intelligence.)

This article was compiled by Lei Feng Network (search "Lei Feng Network" public number concerned), refused to reprint without permission!