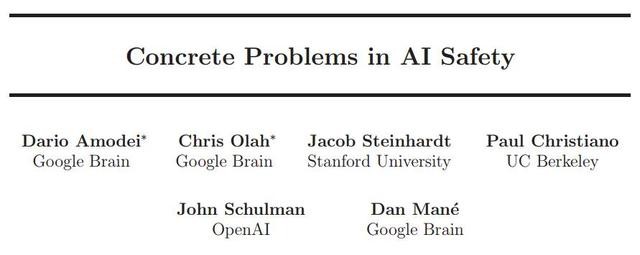

Is artificial intelligence good or summoning demons? Today, Google, which is heavily invested in artificial intelligence, is trying to get out of the middle. Google Brain, Stanford, Berkeley, and OpenAI researchers have co-authored and published a new paper that first describes five issues that researchers must study to make future smart software more secure. If most of the previous studies were hypothetical and inferential, then this paper suggests that the debate about the safety of artificial intelligence can be more concrete and constructive.

Today, new papers from Google Brain, Stanford, Berkeley and OpenAI researchers have met with you. For the first time, the article explores five issues that researchers must study in order to make future smart software more secure. One of the authors, Google researcher Chris Olah, said that most of the previous research was hypothetical and inferential, but we believe that anchoring attention to real machine learning research, to develop practical solutions to create security A reliable artificial intelligence system is essential.

Previously Google has promised to ensure that artificial intelligence software does not cause unintended consequences. Google's first related paper, from Deep Mind. Demis Hassabis also convened an ethics committee to consider the possible negative aspects of artificial intelligence, but did not publish a list of committees.

Oren Etzioni of the Allen Institute of Artificial Intelligence welcomes the solutions listed in Google's new paper. Previously, he had criticized the discussion of artificial intelligence risk theory as too abstract. He said that the various situations listed by Google are specific enough to allow real research, even if we still don't know whether these experiments are really useful. "This is the right person to ask the right question. As for the correct answer, it will be revealed by time."

The following is the main content of this paper:

Summary

Rapid advances in machine learning and artificial intelligence (AI) have caused widespread concern about the potential impact of artificial intelligence on society. In this paper, we discuss such a potential impact: the problem of accidents in machine learning systems, specifically defined as unintentional harmful behavior caused by poor design of real-world artificial intelligence systems. We present a list of five practical research questions related to the risk of accidents. Their classification is based on whether the problem has an incorrect objective function ("avoid side effects" and "avoid reward hacking"). The cost of frequently evaluating the objective function is too high. ("Extensible supervision", or bad behavior in the learning process ("security exploration" and "distribution change"). We also reviewed previous work in these areas and suggested focusing on frontier artificial intelligence systems. The research direction. Finally, we considered such a high-level question: how to think most effectively about the future security of artificial intelligence.

Lead

In the past few years, artificial intelligence has developed rapidly and has made great strides in many areas such as games, medicine, economics, science, and transportation, but it has also emerged in security, privacy, fairness, economics, and military applications. Worried.

The author of this paper believes that artificial intelligence technology is likely to bring overall disruptive benefits to humans, but we also believe that it is worthwhile to take seriously the risks and challenges it may bring. We strongly support research in privacy, security, economics, and politics, but this paper focuses on another issue that we believe is related to the social impact of artificial intelligence: the problem of accidents in machine learning systems. The accident here is defined as the harmful behavior that the machine learning system may unintentionally produce when we specify the wrong objective function. There are no implementation errors related to the learning process or other machine learning.

With the advancement of artificial intelligence capabilities and the growing importance of artificial intelligence systems in social functions, we anticipate that the challenges and challenges discussed in this paper will become increasingly important. The more successful the artificial intelligence and machine learning communities are in predicting and understanding these challenges, the more successful we can be in developing increasingly useful and important artificial intelligence systems.

2. Overview of research questions

In a broad sense, an "accident" can be described as a situation in which a particular goal or task that a human designer thinks fails in the actual design or implementation of the system, and ultimately leads to some harmful outcome. We can classify the security of an artificial intelligence system based on where it went wrong.

First, when the designer defines the wrong objective function, such as maximizing the objective function that causes harmful results, we have the problem of "bad side effects (Section 3)" and "reward hacking behavior (Section 4). "Bad side effects" are usually caused by designers ignoring (and often ignoring a lot) other factors in the environment when designing to achieve a particular goal in an environment. "Reward hacking" is because the designer writes a "simple" objective function in order to maximize the use of the system, but the system abuses the designer's intention (ie, the objective function may be tricked).

MTU High Voltage Generators with MTU Diesel Engine, HV AC Generator

·Engine and alternator shall be mounted on a same frame steel skid.

·Small size, low weight, easy to operating, installation and maintenance.·World most famous brand diesel engine: MTU engine

·World famous brand High Voltage AC alternator: Stamford, Leroy Somer, Marathon, Faraday, etc

·Advanced and reliable controller: Auto start, AMF & Remote control by PC with RS232/485

·Full range protect function and alarm shutdown feature.

·Comply with ISO8528 national standard and ISO9001 quality standard.

. Voltage: 3kV, 3.3kV, 6kV, 6.3kV, 6.6kV, 10kV, 10.5kV, 11kV,13.8kV

Mtu Hv Genset,Mtu Hv Generator,,Mtu High Voltage Genset,Mtu High Voltage Generator

Guangdong Superwatt Power Equipment Co., Ltd , https://www.swtgenset.com